Artificial Intelligence has long captured the imagination of scientists, engineers, and the general public alike.

From optimistic predictions of intelligent machines that can walk, talk, and think like humans to the more recent advancements in deep learning, AI has seen its fair share of peaks and valleys.

However, amidst the excitement and hype, it’s essential to critically examine the progress and challenges in the field.

In this blog post, we delve into a thought-provoking research article that explores the fallacies surrounding AI, shedding light on why achieving human-like intelligence in machines is harder than anticipated.

Let’s embark on a journey through the complexities of AI research, starting with a reflection on past predictions and the evolution of the field.

The Journey of AI: From Spring to Winter

The history of Artificial Intelligence is marked by a series of advances and setbacks, similar to the changing seasons.

In its early stages, AI pioneers painted a picture of limitless possibilities, envisioning machines that could rival human intelligence in its entirety.

The US Navy’s 1958 demonstration of Frank Rosenblatt’s perceptron sparked optimism about AI’s potential to revolutionize various fields. However, these early dreams were tempered by the sobering realities uncovered in the years that followed.

Through the 1960s, Herbert Simon, Claude Shannon, and Marvin Minsky made bold predictions about AI’s future, forecasting that machines would match human capabilities within a couple of decades.

A renewed AI “Spring” in the 1980s gave birth to ambitious projects like Japan’s Fifth Generation Computer Systems project and the US Strategic Computing Initiative, fueled by hopes of achieving general AI. Yet by the end of the 1980s, that enthusiasm had faded as reality failed to meet expectations.

Expert systems, neural networks, and many other AI endeavors encountered roadblocks, revealing the limitations of existing approaches.

The field fell into an “AI Winter,” marked by shrinking funding and widespread skepticism. Even researchers completing a PhD in AI during this period were advised not to use the term “artificial intelligence” on their job applications.

Despite these setbacks, the 1990s witnessed the rise of machine learning, which opened new avenues for exploration. The emergence of deep learning in the 2010s reignited optimism, propelling AI to the forefront once again.

Deep neural networks, fueled by vast datasets and computational power, achieved remarkable feats in speech recognition, image classification, and more.

Yet, beneath the surface, cracks in the facade of deep learning’s intelligence began to emerge.

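These systems proved brittle: they could be fooled by adversarial examples (small, carefully crafted changes to an input that humans would not even notice), and they often failed in ways that revealed a lack of genuine understanding. Such weaknesses raised questions about the path to true artificial intelligence.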

As the field grapples with these challenges, the cycle of AI “Springs” and “Winters” continues, shaping the narrative of progress and prompting deeper reflection on the nature of intelligence.

Debunking Common Misconceptions in AI

Below, we unpack the four key misconceptions the article identifies, each of which sheds light on why artificial intelligence is harder than it looks.

Fallacy 1: The First-Step Fallacy

Many believe that progress in specific AI tasks, like chess or language generation, brings us closer to achieving general AI.

However, this assumption, known as the “first-step fallacy,” overlooks the vast gap between narrow and general intelligence. Just because a machine excels in one area doesn’t mean it’s closer to human-like understanding.

Treating narrow achievements in AI as milestones on the path to general intelligence is like claiming that the first monkey to climb a tree was making progress toward landing on the moon.

The challenge lies in bridging the divide between specialized abilities and broader cognitive capabilities, particularly in areas like common sense reasoning.

Fallacy 2: Easy Things vs. Hard Things

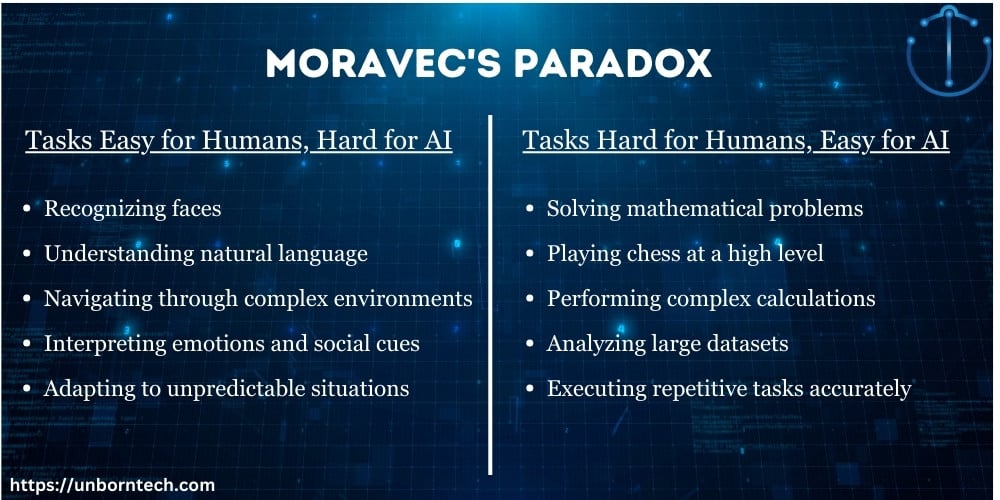

Contrary to popular belief, tasks that humans find effortless, such as understanding natural language or navigating social interactions, are often the most challenging for AI systems.

Meanwhile, tasks like complex calculations or game playing, which humans may find difficult, are often simpler for AI.

This disparity, known as Moravec’s paradox, highlights the limitations of current AI approaches and underscores the importance of acknowledging the complexity of human intelligence beyond mere computational power.

Fallacy 3: The Lure of Wishful Mnemonics

AI terminology often employs human-like terms, such as “understanding” or “learning,” which can mislead us into overestimating the capabilities of AI systems.

While machines may show behaviors similar to human intelligence, they lack the true understanding and adaptability inherent in human cognition.

Recognizing this disparity is essential to avoid exaggerated expectations and accurately assess the limitations of current AI technologies.

Fallacy 4: Intelligence Is All in the Brain

The notion that intelligence resides solely within the brain overlooks the critical role of the body and environment in shaping cognition.

While AI models may mimic certain aspects of neural processing, they lack the embodied experience and sensory-motor interactions that underpin human intelligence.

Intelligence arises from the intricate interplay between the brain, body, and environment, challenging the notion of disembodied AI.

Understanding intelligence therefore requires acknowledging its embodiment and the interconnection of sensory, motor, and cognitive systems, rather than assuming it is purely a product of the brain.

Conclusion

As we strive to advance artificial intelligence, it’s essential to address the misconceptions highlighted in this discussion.

By reevaluating our understanding of AI progress, acknowledging the challenges posed by human-like intelligence, and embracing interdisciplinary collaboration, we can chart a more informed path forward.

Ultimately, developing a nuanced vocabulary for describing AI capabilities and deepening our scientific understanding of intelligence will be crucial for navigating the complexities of AI research and innovation.

Note for readers: This blog post is based on the insightful research article “Why AI is Harder Than We Think,” written in 2021 by Melanie Mitchell, a distinguished American computer scientist.

Frequently Asked Questions (FAQs)

Why is artificial intelligence so difficult?

AI is challenging because it involves replicating complex human cognitive abilities, which are deeply intertwined with perception, action, and social interaction. Additionally, AI must contend with inherent limitations in computational power, data availability, and the understanding of human cognition.

Is AI more powerful than the human brain?

No, AI is not currently more powerful than the human brain. While AI excels at specific tasks, it lacks the holistic capabilities, adaptability, and nuanced understanding that characterize human intelligence.

Can AI actually think?

AI systems can simulate aspects of human thinking, such as problem-solving, pattern recognition, and decision-making. However, their “thinking” is fundamentally different from human cognition, as it relies on algorithms, statistical models, and computational processes rather than consciousness, emotions, and subjective experiences.

What is the biggest problem in AI?

One of the biggest challenges in AI is developing systems that exhibit common sense understanding and adaptability in diverse real-world situations. Additionally, ensuring AI systems are ethical, unbiased, and aligned with human values presents significant challenges for researchers and developers.

What are the 4 fallacies in our understanding of AI?

The 4 fallacies are:

1. Assuming narrow intelligence is on a continuum with general intelligence.

2. Believing that tasks that are easy for humans are also easy for AI, and that tasks that are hard for humans are also hard for AI.

3. Succumbing to wishful mnemonics, where human-like terms applied to AI systems overstate what those systems can actually do.

4. Assuming intelligence is solely contained within the brain, disregarding the role of the body and embodiment.

How can we improve our understanding of intelligence in AI systems?

We can improve it by fostering interdisciplinary collaboration, embracing principles of embodied cognition, and acknowledging the limitations of current AI approaches.