In an era where artificial intelligence is increasingly integrated into various aspects of our lives, from healthcare to finance, it’s imperative to establish comprehensive policies that govern its development, deployment, and impact.

Crafting effective AI policies requires a delicate balance between fostering innovation and ensuring ethical, transparent, and accountable use of AI technologies.

In this blog post, we’ll explore the essential components of a good AI policy and why they are crucial for shaping a responsible AI ecosystem.

Defining AI Policy

Before delving into the components of a good AI policy, let’s first define what an AI policy is.

AI policy refers to a set of guidelines, regulations, and frameworks established by governments, organizations, and stakeholders to govern the development, deployment, and use of AI technologies.

These policies aim to address ethical, legal, societal, and technical considerations associated with AI to ensure its responsible and beneficial integration into various sectors.

10 Major Components Of A Good AI Policy

The following 10 components together provide a comprehensive framework for the ethical, responsible, and beneficial development, deployment, and governance of artificial intelligence technologies.

1. Ethical Guidelines

Ethical considerations lie at the heart of AI policy frameworks. Establishing clear ethical guidelines helps ensure that AI technologies are developed and used in a manner consistent with societal values and principles.

These guidelines should address issues such as fairness, transparency, accountability, and privacy.

For instance, the European Union’s General Data Protection Regulation (GDPR) sets stringent standards for data protection and privacy. Although it is not an AI-specific law, it constrains how AI systems may collect and process personal data.

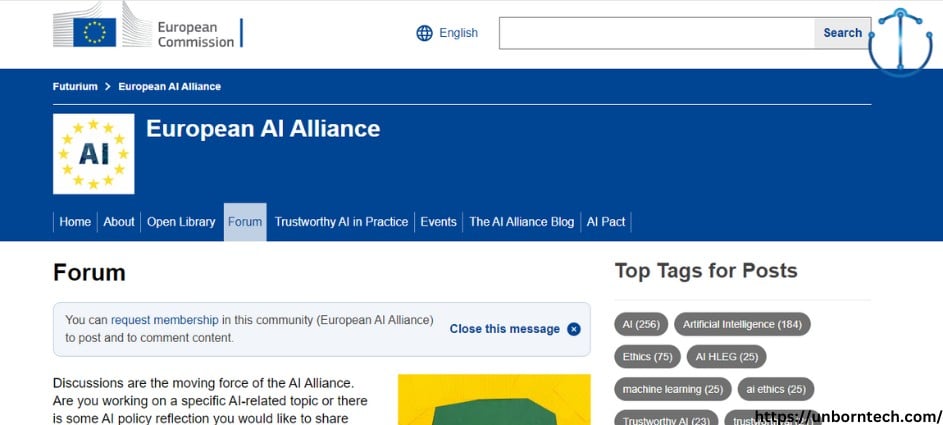

2. Regulatory Frameworks

Robust regulatory frameworks are essential for overseeing the development, deployment, and operation of AI systems. Governments play a crucial role in enacting laws and regulations that govern AI, addressing concerns related to safety, security, and liability.

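For instance, the United States established the National Artificial Intelligence Research Resource (NAIRR) Task Force to develop a roadmap for shared national AI research infrastructure, including access to computing resources and data. It is a research-focused initiative rather than a regulation in itself, but it shows how governments shape the conditions under which AI is developed.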

3. Transparency and Explainability

Transparency in AI systems ensures that users understand how algorithms make decisions and take actions.

Explainability, on the other hand, enables stakeholders to interpret and challenge AI outcomes.

The Singapore government’s Model AI Governance Framework emphasizes the importance of transparency and explainability to build trust and accountability in AI systems.
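To make explainability more concrete, here is a minimal sketch of a linear scoring model that reports how much each input contributed to a decision. The weights, feature names, and applicant values are hypothetical and chosen purely for illustration; real systems are often far more complex and may need dedicated explanation techniques.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for an illustrative scoring model (not from any real system).
WEIGHTS: Dict[str, float] = {"income": 0.5, "debt_ratio": -0.8, "years_employed": 0.3}
BIAS = 0.1


def score(features: Dict[str, float]) -> float:
    """Return the model score: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def explain(features: Dict[str, float]) -> List[Tuple[str, float]]:
    """List each feature's contribution to the score, largest magnitude first."""
    contributions = [(name, WEIGHTS[name] * value) for name, value in features.items()]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


# Made-up applicant used only to demonstrate the output format.
applicant = {"income": 1.2, "debt_ratio": 0.9, "years_employed": 3.0}
for name, contribution in explain(applicant):
    print(f"{name}: {contribution:+.2f}")
print(f"score: {score(applicant):.2f}")
```

Because the contributions add up to the score (minus the bias term), a stakeholder can see which factors drove an outcome and challenge any that look inappropriate.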

4. Accountability and Liability

AI policies should establish mechanisms to hold developers, deployers, and users accountable for the outcomes of AI systems. This includes defining liability in cases of harm or discrimination caused by AI algorithms.

The OECD’s AI Principles highlight the importance of accountability and the need for mechanisms to address the adverse impacts of AI technologies.

5. Data Governance

Data is the lifeblood of AI systems, making data governance and privacy protection critical components of AI policies.

Regulations such as the California Consumer Privacy Act (CCPA) and the GDPR set standards for data collection, processing, and sharing in AI applications.
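As one illustration of what such standards can mean in practice, the sketch below applies two common data-protection techniques before records reach an AI pipeline: data minimization (keeping only the fields a model needs) and pseudonymization (replacing a direct identifier with a keyed hash). The field names, key, and allow-list are assumptions made for this example, and neither technique on its own makes a system compliant with the GDPR or CCPA.

```python
import hashlib
import hmac
from typing import Dict, Iterable

# Hypothetical secret key; in practice it would come from a secrets manager.
PSEUDONYM_KEY = b"replace-with-a-real-secret"

# Hypothetical allow-list: the only fields this illustrative model needs.
ALLOWED_FIELDS = ("age_band", "region")


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 digest."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: Dict[str, str], allowed: Iterable[str] = ALLOWED_FIELDS) -> Dict[str, str]:
    """Keep only allow-listed fields and swap the user ID for a pseudonym."""
    reduced = {field: record[field] for field in allowed if field in record}
    reduced["user_ref"] = pseudonymize(record["user_id"])
    return reduced


# Made-up record: the email address is dropped and the user ID is pseudonymized.
raw = {"user_id": "u-1001", "email": "a@example.com", "age_band": "30-39", "region": "EU"}
print(minimize(raw))
```

Note that pseudonymized data can still count as personal data, since anyone holding the key can link records back to individuals, so access to the key needs its own controls.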

6. Risk Management and Mitigation

AI policies should incorporate mechanisms for identifying, assessing, and mitigating potential risks associated with AI deployment. This includes addressing risks related to bias, discrimination, security vulnerabilities, and unintended consequences.

Collaborative initiatives such as the Partnership on AI (PAI) bring together industry stakeholders, researchers, and policymakers to develop best practices for AI risk management and mitigation.
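The identify, assess, and mitigate cycle described above is often tracked in a risk register. The following is a minimal sketch, assuming a simple likelihood-times-impact score on 1 to 5 scales; the scales, the threshold, and the example risks are all hypothetical, and real frameworks typically use richer criteria.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); the scale is an assumption
    impact: int      # 1 (negligible) to 5 (severe); the scale is an assumption

    @property
    def score(self) -> int:
        """Simple risk score: likelihood multiplied by impact."""
        return self.likelihood * self.impact


def prioritize(risks: List[Risk], threshold: int = 12) -> List[Risk]:
    """Return risks at or above the threshold, highest score first."""
    flagged = [risk for risk in risks if risk.score >= threshold]
    return sorted(flagged, key=lambda risk: risk.score, reverse=True)


# Made-up register entries for illustration only.
register = [
    Risk("biased training data", likelihood=4, impact=4),
    Risk("model inversion attack", likelihood=2, impact=5),
    Risk("unintended automation of appeals", likelihood=3, impact=4),
]
for risk in prioritize(register):
    print(f"{risk.name}: {risk.score}")
```

Risks that fall below the threshold are not discarded; they simply rank lower for mitigation and would normally be reassessed as a system or its context changes.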

7. Human Rights and Socioeconomic Impacts

AI policies should consider the broader societal impacts of AI technologies on human rights, labor markets, and socioeconomic inequalities.

The UNESCO Recommendation on the Ethics of Artificial Intelligence emphasizes the importance of respecting human rights and addressing inequalities in AI development and deployment.

8. Public Engagement and Stakeholder Participation

Effective AI policies should involve meaningful public engagement and stakeholder participation to ensure that diverse perspectives and concerns are taken into account.

This may involve consultation with civil society organizations, industry stakeholders, academia, and affected communities throughout the policymaking process.

Platforms for public dialogue, such as public hearings, consultations, and citizen assemblies, can facilitate transparency, accountability, and legitimacy in AI governance.

For example, the European Commission’s AI Alliance provides a forum for stakeholders to share their views and contribute to the development of AI policies at the European level.

9. Education and Workforce Development

To maximize the benefits of AI and mitigate its disruptive impacts, AI policies should prioritize education and workforce development initiatives.

This includes promoting STEM (Science, Technology, Engineering, and Mathematics) education, reskilling and upskilling programs, and fostering interdisciplinary collaboration.

Countries like Finland have implemented comprehensive national AI strategies that emphasize the importance of education and lifelong learning in preparing individuals for the AI-driven future.

10. International Collaboration

Given the global nature of AI development and deployment, collaboration between countries and international organizations is essential to address cross-border challenges and harmonize regulatory standards.

Initiatives such as the OECD AI Principles and the UNESCO Recommendation on the Ethics of AI advocate for international cooperation and dialogue to promote responsible AI governance on a global scale.

Why the Sudden Interest in AI Policy?

The burgeoning interest in AI policy stems from a growing recognition of the profound impact that AI technologies can have on individuals, communities, and societies at large.

While AI holds immense promise in enhancing efficiency, productivity, and innovation, its unchecked deployment can also give rise to significant ethical dilemmas and societal risks.

Several mishaps and ethical issues have underscored the urgent need for robust AI policies.

A few of the prominent ones are as follows:

Algorithmic Bias and Discrimination

AI algorithms are susceptible to bias, reflecting the inherent biases present in the data used for training. This can lead to discriminatory outcomes, such as biased hiring practices or disproportionate targeting in law enforcement.

For instance, Amazon’s AI recruiting tool was found to exhibit gender bias, favoring male candidates over female candidates, highlighting the need for algorithmic fairness and accountability.
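One simple way to quantify this kind of disparity is to compare selection rates across groups. The sketch below computes each group's selection rate and the ratio of the lowest rate to the highest, sometimes called the disparate impact ratio. The data is made up, and the function names are our own. A ratio below 0.8 is sometimes treated as a warning sign, following the "four-fifths" rule of thumb from US employment guidelines, though no single metric settles whether a system is fair.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the share of positive decisions for each group."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any positive decisions")
    return min(rates.values()) / highest


# Made-up screening outcomes: (group label, shortlisted?)
sample = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

An audit would typically run a check like this on real decision logs at regular intervals and investigate the causes whenever the ratio drops.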

Privacy Breaches and Data Misuse

AI applications often rely on vast amounts of personal data, raising concerns about privacy infringement and data misuse. Inadequate data protection measures can lead to unauthorized access, data breaches, and exploitation of sensitive information.

Notable cases include the Cambridge Analytica scandal, where personal data from millions of Facebook users was harvested without their consent for political profiling and manipulation.

Autonomous Vehicle Accidents

The development of autonomous vehicles has raised complex ethical and legal questions surrounding liability and safety.

High-profile accidents involving self-driving cars, such as the Uber autonomous vehicle fatality in 2018, have highlighted the need for clear regulations and safety standards to govern the deployment of AI-powered transportation systems.

Deepfake Manipulation

The proliferation of deepfake technology, which uses AI to create highly realistic but fabricated images and videos, poses significant threats, including misinformation, identity theft, and privacy infringement.

Deepfake manipulation has been exploited for malicious purposes, including spreading false information and manipulating public opinion, emphasizing the importance of combating disinformation and preserving digital authenticity.

For example, in an incident reported in early 2024, a finance worker at a multinational firm in Hong Kong was tricked into transferring about $25 million to fraudsters who used deepfake technology to impersonate colleagues on a video call.

Healthcare AI Misdiagnosis

AI-based diagnostic systems in healthcare hold promise for improving patient outcomes and streamlining medical processes. However, instances of AI misdiagnosis or erroneous recommendations underscore the importance of rigorous testing, validation, and regulatory oversight to ensure the safety and efficacy of AI-driven healthcare solutions.

One such incident involved an AI system for diagnosing diabetic retinopathy from Google and its sister company Verily Life Sciences, which faced practical challenges during field trials in Thailand.

Conclusion

A good AI policy integrates ethical guidelines, regulatory frameworks, transparency, accountability, data governance, risk management, human rights considerations, public engagement, education and workforce development, and international collaboration.

By addressing these components comprehensively, policymakers can foster innovation while ensuring that AI technologies serve the common good and uphold fundamental values of fairness, transparency, and accountability.

As AI continues to evolve, it’s essential to adapt and refine AI policies to address emerging challenges and opportunities in the AI landscape.

Frequently Asked Questions (FAQs)

Why is AI policy necessary?

AI policy is necessary to establish guidelines and regulations that govern the development, deployment, and impact of artificial intelligence technologies, ensuring their ethical, responsible, and beneficial use.

How can AI policies ensure fairness and mitigate bias in AI algorithms?

AI policies can ensure fairness and mitigate bias in AI algorithms by promoting diverse and representative training data sets, implementing bias detection and mitigation algorithms, and conducting regular audits of AI systems for fairness and equity.

What is an AI acceptable use policy?

An AI acceptable use policy outlines the acceptable and unacceptable uses of artificial intelligence within an organization or community. It establishes guidelines for the ethical, responsible, and lawful use of AI technologies, addressing issues such as data privacy, algorithmic bias, transparency, accountability, and adherence to relevant regulations and standards.

What is the most important component of AI policy?

While all components of AI policy are important, ensuring ethical principles and values such as fairness, transparency, accountability, and human-centered design is often considered the most crucial component.

What are the different types of AI policy?

The different types of AI policy can vary depending on their scope, focus, and intended outcomes. Common types include national AI strategies, regulatory frameworks, guidelines and standards, industry codes of conduct, research ethics protocols, procurement policies, and international agreements or treaties aimed at promoting cooperation and collaboration in AI governance and development.